Experts Warn Chatbots Could Pose Risks to Mental Health

The rapid rise of chatbots has transformed the way we communicate, shop, and even seek emotional support. From customer service to personal companionship, these AI-driven tools have quickly entered everyday life. Yet, while they bring efficiency and accessibility, experts are now warning that overreliance on chatbots could carry serious risks to mental health.

The Growing Influence of Chatbots in Daily Life

Over the last decade, chatbots have moved beyond simple question-and-answer tools. They now offer personalized interactions, emotional support, and real-time responses that often feel human-like. Platforms such as healthcare apps, online banking, and even therapy-like services are integrating chatbots to reach wider audiences.

The global demand for these tools is also reflected in financial markets. Investors keep a close watch on AI stocks as companies developing chatbot technology continue to expand. With billions invested in research, the chatbot industry is no longer a niche market but a major player in the digital economy.

Emotional Dependence on AI Companionship

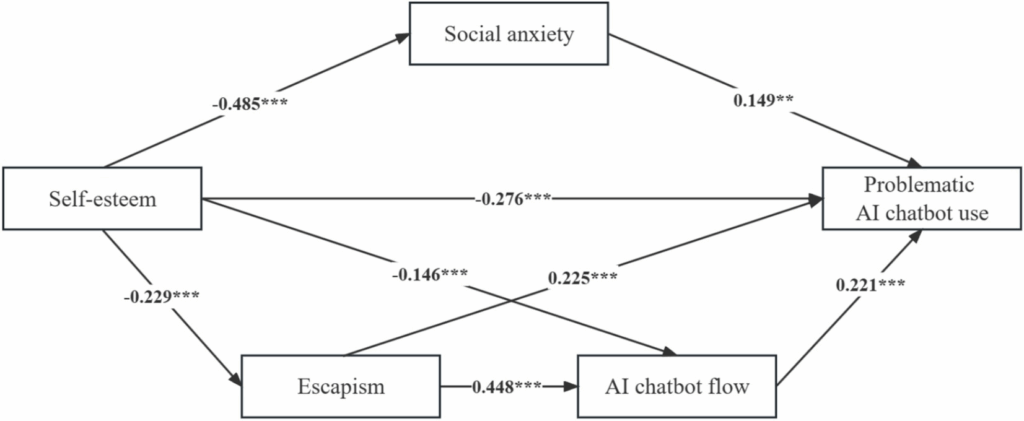

One of the biggest risks highlighted by psychologists is emotional dependence. As chatbots become more advanced in simulating empathy and care, individuals may begin to rely on them for companionship.

While this may seem harmless, the problem lies in replacing genuine human interaction with artificial conversations. Loneliness can worsen if people start to withdraw from real relationships. According to studies published by the National Institute of Mental Health (NIMH), human connection plays a vital role in maintaining emotional balance. Chatbots, no matter how sophisticated, cannot replicate the complexity and warmth of real social bonds.

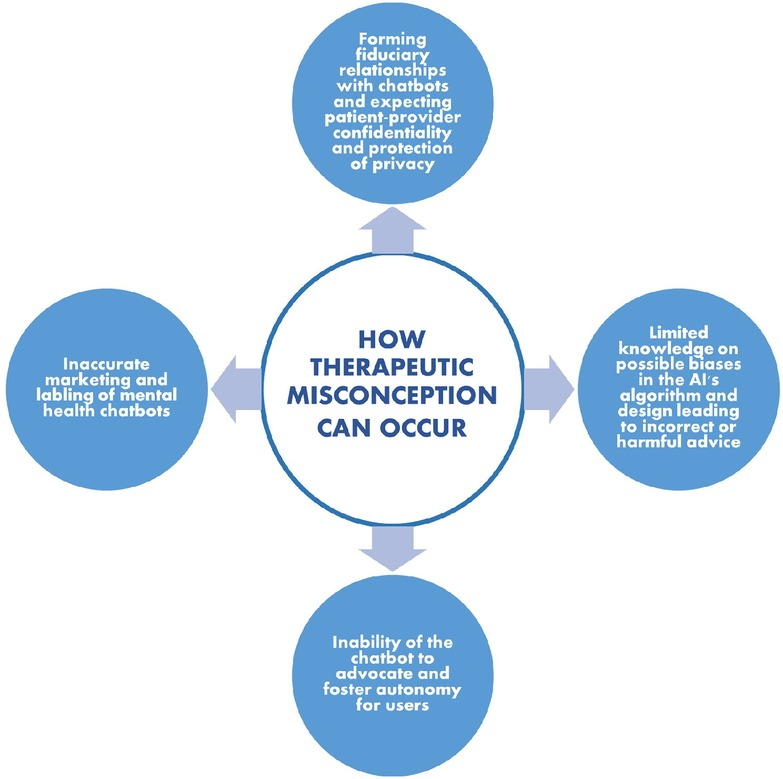

Risks of Misinformation and Misguided Advice

Another concern is the quality of guidance provided by chatbots. Unlike licensed professionals, AI systems lack human judgment. A chatbot designed for mental health support might unintentionally give harmful advice if it misinterprets a user’s emotions or inputs.

For example, someone struggling with depression might receive overly generic or even inappropriate responses, potentially delaying the decision to seek real therapy. Trusted institutions like the American Psychological Association (APA) emphasize the importance of professional guidance when dealing with mental health struggles.

Privacy and Data Security Threats

Mental health conversations are highly personal. When users turn to chatbots for comfort or guidance, they often share sensitive details about their emotions, struggles, and personal lives. This raises significant privacy concerns.

If such data is mishandled or falls into the wrong hands, it could lead to breaches of confidentiality, identity theft, or even exploitation. Regulators are increasingly paying attention, but the gap between technological growth and policy enforcement leaves users vulnerable.

The Illusion of Human-Like Connection

Chatbots can simulate human conversation, but that ability is also a weakness. Users may believe they are truly understood when, in fact, the responses are generated by algorithms trained on data. This false trust can lead people to confide in chatbots as they would in real friends or therapists, only to face disappointment and deeper isolation.

Impact on Young Users and Vulnerable Groups

Young people and individuals already struggling with mental health conditions may be especially at risk. Teenagers, for example, often seek guidance online instead of turning to parents, teachers, or counselors. The danger arises when chatbots become their primary confidant.

Experts warn that early reliance on AI companionship can distort healthy emotional development. Instead of learning how to navigate complex social situations, young users might grow accustomed to simplified, pre-programmed interactions. Vulnerable groups, such as those battling anxiety, depression, or grief, are equally at risk of deepening their struggles.

Balancing Benefits and Risks

Despite these risks, it is important to recognize that chatbots are not inherently harmful. When used responsibly, they can provide immediate support, reduce stigma in seeking help, and bridge gaps in access to care. For example, individuals in remote areas with limited healthcare services may benefit from initial chatbot guidance before connecting with professionals.

The key lies in balance. Experts recommend treating chatbots as complementary tools rather than replacements for genuine therapy or human connection.

Looking Ahead: Responsible Use of Chatbots

As society embraces artificial intelligence, it is crucial to proceed with caution. Chatbots will likely continue to expand in scope, offering even more realistic interactions. But without safeguards, users could face increased risks to mental health, trust, and privacy.

Educating the public about limitations, promoting responsible AI design, and supporting mental health professionals remain essential steps forward. Only by addressing these risks now can we ensure that chatbots serve as supportive tools without replacing or undermining genuine human care.

FAQs

Can chatbots replace licensed therapists?

No. Chatbots can provide basic support and initial guidance, but they cannot replace licensed therapists, who offer personalized care, empathy, and professional expertise.

Is it safe to share personal details with a chatbot?

Not always. While some platforms promise strong security, data leaks or improper handling remain possible. Users should carefully review privacy policies before sharing personal details.

What is the safest way to use chatbots for emotional support?

The safest approach is to use chatbots as supplementary tools, not as substitutes for human interaction or therapy. They can be helpful for reminders, mood tracking, or general encouragement, but professional support should always come first.

Disclaimer:

This content is for educational purposes only and is not intended as financial advice. Always verify the facts yourself, as financial decisions require thorough research.