Global Outage Hits Major Companies with 503 Errors, Disrupting Services Worldwide

On 20 October 2025, the internet reminded us how fragile it can be. Major platforms like Canva, Medium, and Snapchat, along with many cloud-based business tools, suddenly stopped working. Users around the world were hit with “503 Service Unavailable” errors. It wasn’t just one website; it was a digital chain reaction. The issue traced back to Amazon Web Services (AWS), one of the biggest cloud providers, which powers thousands of apps and websites.

As AWS struggled, dependent servers became overloaded and platforms went dark. People couldn’t design, post, play, or even access internal business tools. Companies faced interruptions, creators lost work, and businesses panicked as critical systems failed.

This outage showed one important truth: when the cloud goes down, the world slows down. Our digital life depends on a few major providers, and one glitch can cause global disruption within minutes.

This article explores what caused the outage, who was affected, and why this event should be a wake-up call for both users and businesses.

Technical Snapshot: What Failed and Why It Rippled

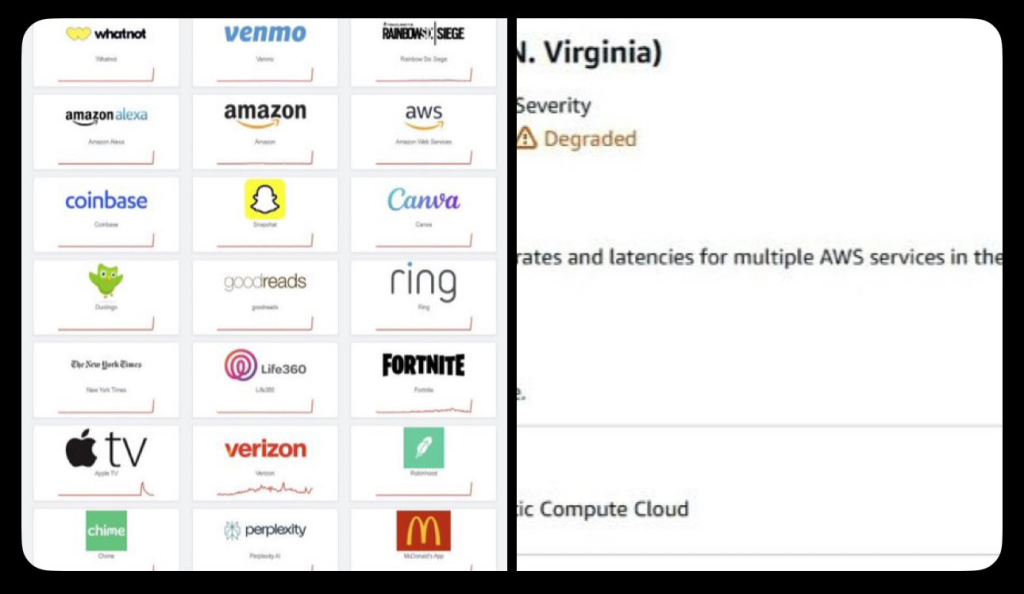

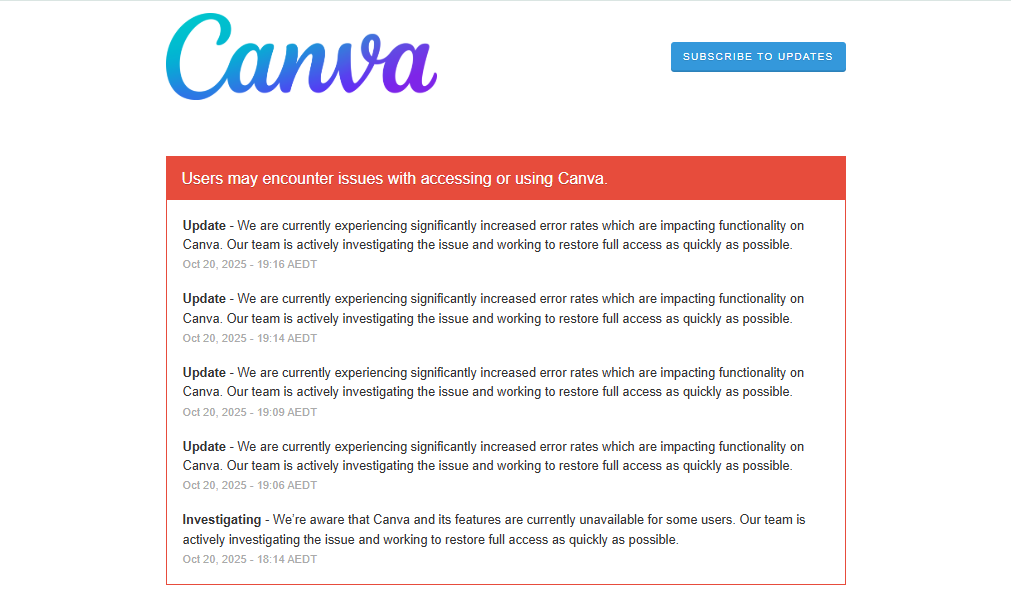

On 20 October 2025, multiple services returned HTTP 503 (Service Unavailable) errors. The visible symptom was simple. Pages would not load. APIs timed out. Users saw “service unavailable” messages. Investigations quickly pointed to Amazon Web Services. AWS reported increased error rates and latency in its US-EAST-1 region. That single region hosts a huge number of backend systems. When core services lag, many dependent apps struggle or stop working. The AWS status updates confirmed the incident and ongoing mitigation efforts.

Companies built on top of cloud components felt the shock. Real-time apps, design platforms, voice assistants, and gaming backends all reported failures or degraded performance. The outage did not affect only consumer sites; it also disrupted business tools and internal dashboards that depend on the same cloud services. Early reports named Canva, Snapchat, Fortnite, Perplexity, Airtable, and others among those hit.

How Did a 503 Become a Global Problem?

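A 503 error means a server is temporarily unable to handle a request. The cause can be overload, misconfiguration, or an unavailable backend. In cloud setups, many apps rely on managed databases, queues, and storage, so a slow or failing managed service can surface as a 503 in the app built on top of it.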
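Because a 503 signals a temporary condition, well-behaved clients back off instead of hammering the server, and HTTP lets a server attach a `Retry-After` header to a 503 to say how long to wait. The sketch below shows one common client pattern (capped exponential backoff with "full jitter"); it is not what any of the affected companies necessarily run. The `send` callable and the `(status, retry_after_seconds)` tuple are hypothetical stand-ins for a real HTTP client, and only the seconds form of `Retry-After` is modeled.

```python
import random
import time
from typing import Callable, Optional, Tuple

# Hypothetical response shape for this sketch: (status_code, retry_after_seconds or None).
Response = Tuple[int, Optional[float]]


def call_with_backoff(send: Callable[[], Response],
                      max_attempts: int = 5,
                      base_delay: float = 0.5,
                      max_delay: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep,
                      rng: Optional[random.Random] = None) -> Response:
    """Call `send`, retrying only on 503 with capped exponential backoff and full jitter."""
    if rng is None:
        rng = random.Random()
    response = send()
    for attempt in range(1, max_attempts):
        status, retry_after = response
        if status != 503:
            return response  # success or a non-retryable status: stop retrying
        if retry_after is not None:
            delay = min(retry_after, max_delay)  # the server said how long to wait
        else:
            # Full jitter: a random wait up to the capped exponential bound, so many
            # clients do not retry in lockstep against a recovering service.
            delay = rng.uniform(0, min(max_delay, base_delay * 2 ** (attempt - 1)))
        sleep(delay)
        response = send()
    return response  # may still be a 503 after max_attempts calls
```

The jitter matters most during a large outage: if millions of clients retry on the same schedule, their retries arrive in synchronized waves that can keep a recovering service overloaded.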

When one core service has trouble, the servers that depend on it start queuing requests. Latency rises. Error rates climb. The result is a cascade of failures across different clients and regions. This cascading effect explains why a problem that begins in a single cloud region can appear global within minutes.
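A back-of-the-envelope model shows why queues build so quickly. Assuming requests keep arriving at a steady rate while a degraded dependency can only serve a lower rate, the backlog grows by the difference every second. The rates below are illustrative numbers, not measurements from this outage, and the model ignores timeouts and retries.

```python
def backlog_after(arrival_rate: float, service_rate: float, seconds: float) -> float:
    """Requests still waiting after `seconds` (simple fluid approximation).

    If the service keeps up, nothing accumulates; otherwise the queue grows
    by (arrival_rate - service_rate) requests every second.
    """
    return max(0.0, arrival_rate - service_rate) * seconds


def wait_for_new_request(backlog: float, service_rate: float) -> float:
    """Seconds a newly arrived request waits behind the backlog (first in, first out)."""
    if service_rate <= 0:
        return float("inf")  # dependency fully down: the queue never drains
    return backlog / service_rate


# Illustrative: 1,000 requests/s arrive, but the struggling dependency handles only 600/s.
queued = backlog_after(1000, 600, seconds=60)
print(queued)                             # 24000
print(wait_for_new_request(queued, 600))  # 40.0
```

A 40-second wait is longer than the timeout many clients use, so from the user's side the request fails even though the dependency is still partly working.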

Immediate Impact on Users and Businesses

Millions of users faced interruptions. Creators could not save or export designs. Teams lost access to shared documents. Gamers were booted from matches. Voice assistants failed to respond. Small businesses and marketing teams that run social campaigns found themselves unable to meet deadlines. For companies, the outage meant lost productivity and potentially lost revenue. For many teams, the timing was critical; scheduled campaigns, deadlines, and live operations rely on near-constant uptime.

Canva’s own status page logged an unresolved incident on 20 October 2025, while outage trackers showed a rapid spike in user reports. Downdetector and similar sites recorded thousands of alerts within minutes of the first failures. Public channels were filled with screenshots and messages from frustrated users.

Market and Operational Consequences

Cloud outages can affect investor sentiment toward both cloud providers and the large platforms that run on them. Trading desks and analysts monitor such events, and some traders used AI-driven stock research tools to quickly assess short-term risk exposure for cloud-reliant firms. Beyond stocks, corporate risk teams must activate incident playbooks to protect customers and maintain operations.

Operationally, the outage exposed weak points in dependency graphs. Many businesses documented how single-region dependencies caused service degradation. Engineering teams scrambled to switch traffic, scale fallback systems, or toggle degraded modes. For some companies, the lack of a fast failover plan translated into hours of downtime.
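Switching traffic away from a failing region can be sketched as ordered regional failover. This assumes the same service is deployed in more than one region; the identifiers are real AWS region names used only as placeholders, and `fetch` is a hypothetical stand-in for calling the service in a given region. In production this decision often lives in DNS or a global load balancer rather than in application code, but the logic is similar.

```python
from typing import Callable, List, Sequence, Tuple

# Placeholder preference order: primary region first, then the fallback.
REGIONS = ("us-east-1", "us-west-2")


def fetch_with_failover(fetch: Callable[[str], int],
                        regions: Sequence[str] = REGIONS) -> Tuple[str, int]:
    """Return (region, status) from the first region that answers without a 5xx.

    `fetch(region)` is a hypothetical call to the same service in that region;
    it returns an HTTP status code or raises ConnectionError.
    """
    failures: List[str] = []
    for region in regions:
        try:
            status = fetch(region)
        except ConnectionError as exc:
            failures.append(f"{region}: {exc}")  # unreachable: try the next region
            continue
        if status < 500:
            return region, status  # usable answer (4xx is the client's problem, not the region's)
        failures.append(f"{region}: HTTP {status}")  # server-side error: fail over
    raise RuntimeError("all regions failed: " + "; ".join(failures))


# Example: the primary returns 503, so the request moves to the secondary.
print(fetch_with_failover(lambda r: 503 if r == "us-east-1" else 200))
# ('us-west-2', 200)
```

Failover only helps if the secondary region can actually serve the request, which means its data and its own dependencies must also be available outside the failing region.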

Public Reaction and Real-time Signals

Social platforms became the primary place for live updates. Engineers and product leaders posted status notes. Independent trackers and users shared error screenshots. A popular feed showed a grid of affected brand logos, highlighting the broad impact across entertainment, productivity, and commerce.

Other accounts, such as those of small SaaS providers, confirmed sporadic errors for their customers. These public reactions helped people map the outage’s reach before full official post-mortems appeared.

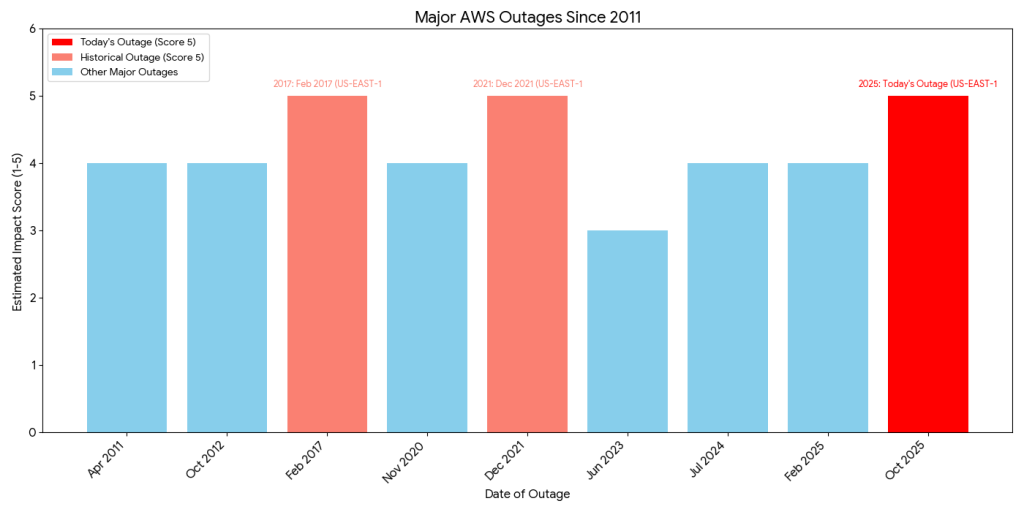

Why Does This Outage Matter? The Structural Problem

Cloud concentration is the core issue. A handful of cloud providers host the backbone of modern apps. When a major provider has trouble, many unrelated services feel the effect. The convenience of managed cloud services comes with a centralization risk. Multi-region redundancy and cross-cloud strategies can reduce exposure, but such architectures add cost and complexity. Smaller firms rarely have the budget or expertise to implement full multi-cloud redundancy.

This event also highlights dependence on managed services such as DynamoDB, S3, or ELB. When those building blocks fail or slow down, the stack above them inherits the fault. The result is systemic fragility at planetary scale.
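One widely used way to keep a slow managed service from dragging down everything above it is a circuit breaker: after repeated failures, callers stop waiting on the dependency for a cool-down period and serve a degraded fallback instead. The article does not say which companies used this pattern; the class below is a minimal single-threaded sketch, and its name, thresholds, and injectable clock are illustrative choices.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Skip a failing dependency for a while and serve a fallback instead.

    Closed: calls go through. Open: calls are skipped until `reset_after`
    seconds pass. After that, one trial call decides whether to close again
    (success) or reopen (failure), the so-called half-open step.
    """

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            return fallback()  # open: don't add load to a struggling dependency
        try:
            result = operation()
        except Exception:  # broad on purpose in this sketch: any failure counts
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or reopen after a failed trial
            return fallback()
        self.failures = 0
        self.opened_at = None  # success: close the circuit
        return result
```

The fallback might return cached data or a read-only view. A production breaker would also need thread safety and would usually limit how many trial calls run during the half-open step.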

Short-term Fixes and Long-term Lessons

Short term, engineers routed traffic away from the affected region where possible. Providers worked to restore core services and reduce error rates. Affected companies posted status updates and asked customers to wait while teams applied fixes. For users, the practical steps were simple: save work offline when possible, check official status pages, and use alternative tools if available.

Long term, businesses must rethink resilience. That includes better failover plans, region-agnostic design, and testing of outage scenarios. Transparency matters too. Clear communication during incidents reduces customer frustration. Regulators and enterprise customers may also press for stronger SLAs or clearer incident reporting after post-mortems are released.
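Testing outage scenarios can start small with fault injection: wrap a dependency so that some calls fail the way they did during this outage, then check that the application degrades instead of crashing. `inject_faults` and `render_page` are hypothetical names for this sketch; teams that run the same idea against real infrastructure often call it chaos engineering.

```python
import random
from typing import Callable


def inject_faults(dependency: Callable[[], int], failure_rate: float,
                  rng: random.Random) -> Callable[[], int]:
    """Return a wrapper that answers 503 for roughly `failure_rate` of calls."""
    def wrapped() -> int:
        if rng.random() < failure_rate:
            return 503  # simulated outage: the real dependency is not called
        return dependency()
    return wrapped


def render_page(get_status: Callable[[], int]) -> str:
    """Hypothetical app code under test: degrade instead of failing outright."""
    if get_status() == 503:
        return "read-only mode: changes will sync later"
    return "full page"


# Outage drill: every call fails, and the page must still render in degraded mode.
always_down = inject_faults(lambda: 200, failure_rate=1.0, rng=random.Random(0))
assert render_page(always_down) == "read-only mode: changes will sync later"

# Partial outage: roughly 30% of calls fail, so both outcomes should appear.
flaky = inject_faults(lambda: 200, failure_rate=0.3, rng=random.Random(42))
outcomes = {render_page(flaky) for _ in range(1000)}
assert outcomes == {"full page", "read-only mode: changes will sync later"}
```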

What to Watch Next

Watch the AWS Health Dashboard and official status pages for a final root-cause statement and timeline. Look for post-incident reports from affected platforms. These reports will show how much traffic shifted, which components failed, and which mitigations worked.

Industry commentators and technical leads will publish analyses in the hours and days after restoration. Regulators or large enterprise customers may ask for more formal reviews if the outage caused major business losses.

Concluding Note

The outage on 20 October 2025 is a clear reminder. Digital services appear seamless until the infrastructure behind them slips. Companies must balance efficiency with resilience. Users should expect occasional failures and keep backups of critical work. The cloud is powerful. It is also centralized. That combination makes modern outages both dramatic and instructive.

Frequently Asked Questions (FAQs)

Why did sites like Canva and Snapchat show 503 errors on 20 October 2025?

On 20 October 2025, many websites like Canva and Snapchat showed 503 errors. This happened because the AWS services they depend on were overloaded or temporarily unavailable, which stopped normal access for users.

Did AWS have an outage on 20 October 2025?

Yes. On 20 October 2025, AWS reported issues in its US-EAST-1 region. This caused slow responses and errors for many websites and apps around the world that rely on AWS cloud services.

How can one AWS region cause a global outage?

A single AWS region powers thousands of apps. When it ran into trouble on 20 October 2025, many platforms relying on services hosted there went down, creating a global ripple effect.

Disclaimer: The above information is based on current market data, which is subject to change, and does not constitute financial advice. Always do your own research.