Nvidia: Why Its Biggest Tech Clients Could Become Its Strongest Rivals

In 2024, Nvidia became one of the most valuable companies in the world by powering the AI boom. Its high-performance GPUs fuel tools like ChatGPT, autonomous cars, and cloud services. Tech giants such as Microsoft, Amazon, Google, and Meta depend on Nvidia to run their largest AI models. In fact, most AI training today happens on Nvidia hardware.

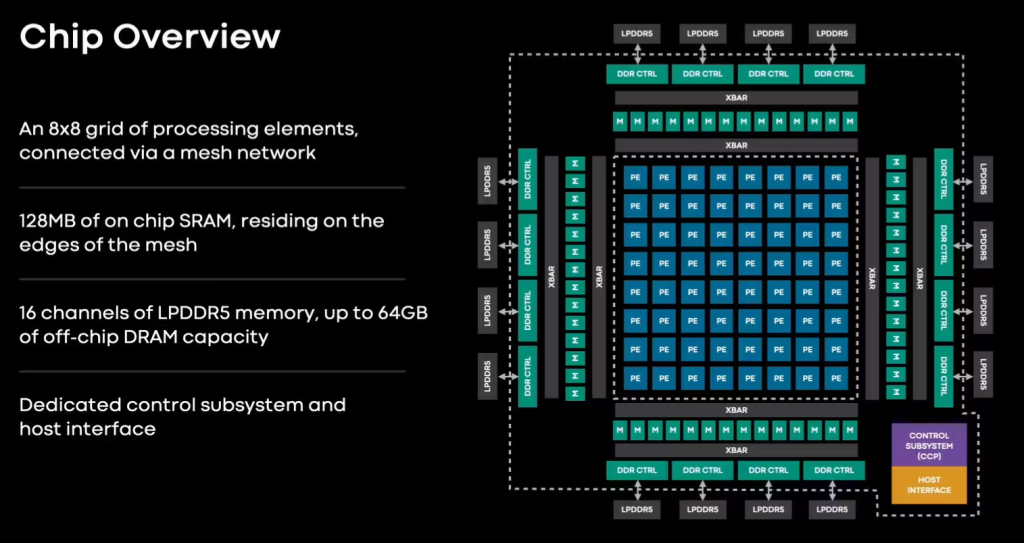

But there is a major twist. These same clients are now building their own custom AI chips. Why? Because Nvidia's chips are expensive and in high demand, and they give Nvidia too much control over the AI supply chain. Big Tech wants lower costs, more speed, and full control of its data. So in late 2023 and 2024, companies like Microsoft (Maia), Amazon (Trainium), and Google (TPU) stepped up the rollout of their in-house chips.

This shift could turn Nvidia's biggest customers into serious rivals. The AI hardware war is no longer about one company. It is about who will control the future of AI compute, and the battle has already begun.

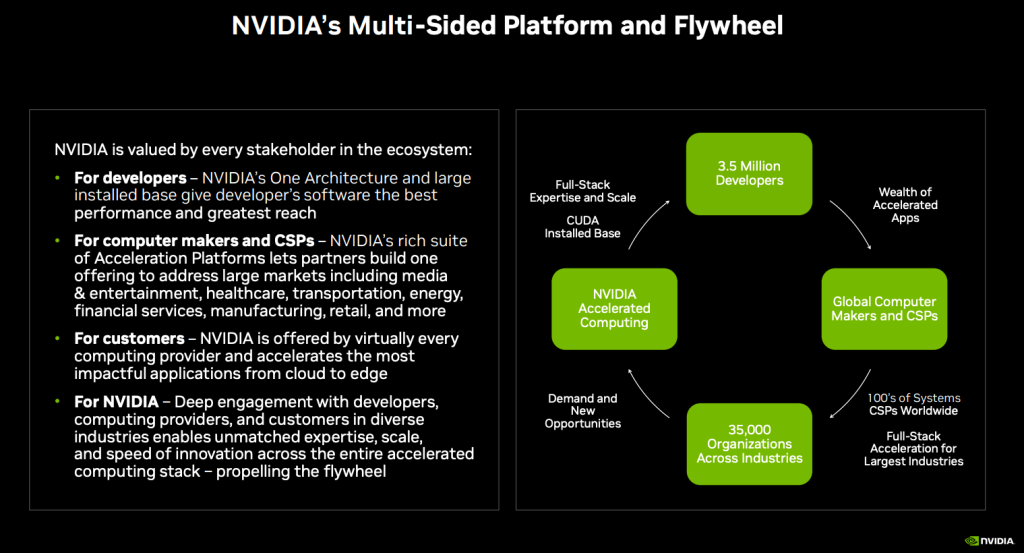

Nvidia’s Lead and Ecosystem Advantage

Nvidia spent years building both chips and software. Its GPUs, paired with the CUDA programming platform, became the default for AI research and cloud training. That gave Nvidia a strong hold on developers and data centers. The result: many firms built their AI stacks around Nvidia hardware, which made Nvidia hard to replace for high-end training.

Why Does Big Tech Still Buy Nvidia?

Top cloud firms use Nvidia for scale and speed. The hardware works today, and the tools and developer libraries save time. For many workloads, Nvidia remains the fastest and most reliable option. That is why these companies keep buying, even as they look for alternatives.

The Turning Point: Price, Control, and Risk

High-performance GPUs cost a lot. Supply shortages make prices volatile. Firms worry about depending on a single supplier. Some also want chips tuned to their own models. These reasons pushed tech giants to design their own accelerators. The shift is strategic: it is about cost, performance, and control.

Big Tech’s In-house Chips

Google began deploying its Tensor Processing Units (TPUs) years ago, long before the current wave of custom chips. TPU v5e launched in 2023 and expanded across Google Cloud. Google uses its TPUs to train large models at lower cost, which gives it flexibility beyond off-the-shelf GPUs.

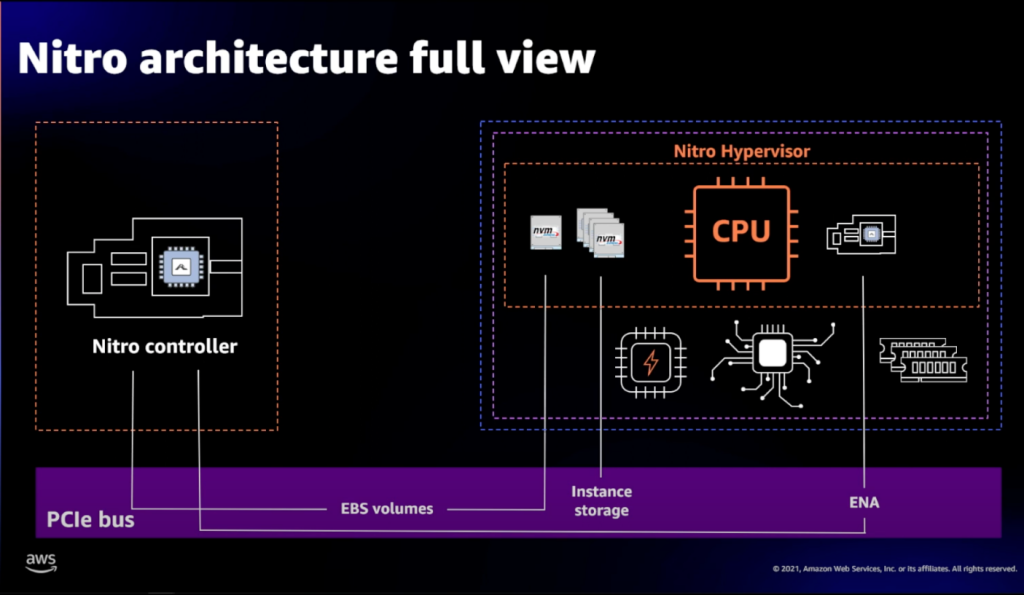

Amazon built the Inferentia and Trainium chips for AWS. Inferentia targets inference costs and Trainium targets training costs. In December 2024, AWS made Trainium2 generally available and announced plans for a next-generation chip, signaling deeper investment in custom silicon. AWS also signed on large customers, such as Anthropic, to anchor demand for its chips.

Microsoft designed Maia to power Azure AI. Microsoft announced Maia in late 2023 and expanded its use in 2024. The company integrates Maia into its Azure infrastructure and its work with OpenAI. This reduces Microsoft's reliance on third-party chips for some workloads.

Meta built its MTIA (Meta Training and Inference Accelerator) chips to serve its social and recommendation workloads. Meta has shared roadmaps showing large in-house deployments, and the company cites cost reduction and model-chip co-design as core benefits. MTIA chips now run at scale across Meta's services.

Tesla tried to build Dojo, a custom supercomputer for self-driving training. The Dojo effort showed how a single company can chase very specific needs. Tesla's path has been rocky, but the project illustrates the case for bespoke hardware built around unique workloads.

Strategic Motives Behind In-house Silicon

Control matters. Custom chips let firms tune hardware to their own models. That boosts efficiency and can lower the total cost of ownership over time. Security and data privacy also play a role, since firms prefer to keep sensitive workloads inside their own stacks. Lastly, a custom chip can be a strategic moat that makes services harder to copy.

Why Is Replacing Nvidia So Hard?

Nvidia did not just build chips. It built an ecosystem. CUDA, optimized libraries, and partner integrations are big assets. Nvidia also keeps releasing new architectures, such as Hopper and Blackwell, whose high-end designs are hard to match. The company has strong partnerships with foundries like TSMC, which keeps it close to the leading edge of process technology. All this means rivals face a steep climb.

Nvidia’s Countermoves and Market Responses

Nvidia has moved toward selling full systems. The company now offers complete AI stacks, pushing networking, DPUs (data processing units), and AI-optimized servers alongside its GPUs. In 2025 it also signed large commercial deals and made investments with cloud and infrastructure partners. These steps aim to lock in customers beyond chips and show how aggressively Nvidia is defending its position.

Geopolitics and Market Fragmentation

Regulatory limits changed the market in 2025. Nvidia's access to some markets, such as China, dropped sharply. On October 19, 2025, Nvidia CEO Jensen Huang said the company's market share in China had fallen to near zero because of U.S. export controls. This shift accelerated local chip development and encouraged domestic alternatives. The trend raises the chance that regional hardware ecosystems will diverge.

Short, Medium, and Long-term Outlook

In the short term, Nvidia remains essential for top-tier training, and its chips are hard to match right away. In the medium term, expect growing competition for inference and specialized workloads, with cloud providers using a mix of Nvidia GPUs and their own accelerators. In the long term, the market could fragment, and custom chips may capture large slices of demand. But Nvidia can pivot to systems and software to keep its value. The chip launches and partnership deals of 2023 through 2025 already point in this direction.

What Does This Mean for the AI Industry?

Competition will push prices down for many workloads. It will also spur innovation in chip design and model-chip co-design. At the same time, the software landscape may become more fragmented, which could increase the cost of porting models across hardware. Customers and startups will face more choices. Investors and analysts may use tools such as AI-powered stock research platforms to track which firms win on both silicon and services.

Wrap Up

The client-to-rival shift is already underway. Big Tech has real scale and real incentives to build chips. Nvidia still leads in many areas, but the market is evolving fast. The next few years will decide who controls AI compute. The winner will likely be the firm that combines hardware, software, and scale most effectively.

Frequently Asked Questions (FAQs)

Why are big tech firms building their own AI chips?

Big tech firms want cheaper, faster, and more customized AI chips. Nvidia chips are costly and in high demand, so companies expanded their own chip programs in 2023 and 2024.

Can in-house AI chips compete with Nvidia?

In-house AI chips can compete in some tasks, especially specific workloads. However, Nvidia still leads in high-end training because of its powerful GPUs and strong software tools.

Will Nvidia lose market share to its biggest clients?

Nvidia may lose some market share over the next few years as big tech firms grow their own chip programs. As of 2025, however, Nvidia remains important for large AI projects.

Disclaimer: The above information is based on current market data, which is subject to change, and does not constitute financial advice. Always do your own research.