Prince Harry, Meghan Urge Halt to Superintelligence Development Amid AI Risks

On October 22, 2025, Prince Harry and Meghan Markle joined a wide-ranging group of scientists, tech leaders, and public figures in issuing a striking call: halt the development of “superintelligent” artificial intelligence until it can be proven safe.

The signatories warn that AI systems capable of outthinking humans at almost every task could do more than change our jobs and privacy: they could challenge human control itself. The letter makes clear that progress matters, but not at the cost of our future.

At a time of rapid technological advance, the couple's involvement adds a new dimension to the debate. It shows that questions about AI ethics, power, and responsibility are no longer confined to labs and boardrooms; they are part of our everyday world. The real question is whether society can keep up with the technology as it races ahead, and steer it wisely.

About Superintelligence

Superintelligence means machines that can outthink humans in nearly every field. These systems could learn faster than people. They could improve themselves without human help. That makes them different from today’s narrow AI, which is good at single tasks.

Superintelligence raises unique risks. It could act in ways designers did not foresee. It could concentrate power in a few labs or governments. That is why scientists and ethicists debate it fiercely. Several high-profile warnings and past pause calls show the worry is real.

Prince Harry and Meghan’s Statement

On October 22, 2025, Prince Harry and Meghan Markle added their names to a short, forceful statement calling for a prohibition on developing superintelligent AI until it can be proven safe and enjoys broad public backing.

The statement was released alongside a long list of signatories from varied backgrounds. It argued that development should not proceed until there is a clear scientific consensus and public consent. Their involvement drew attention because it united celebrity influence with the technical and policy worlds.

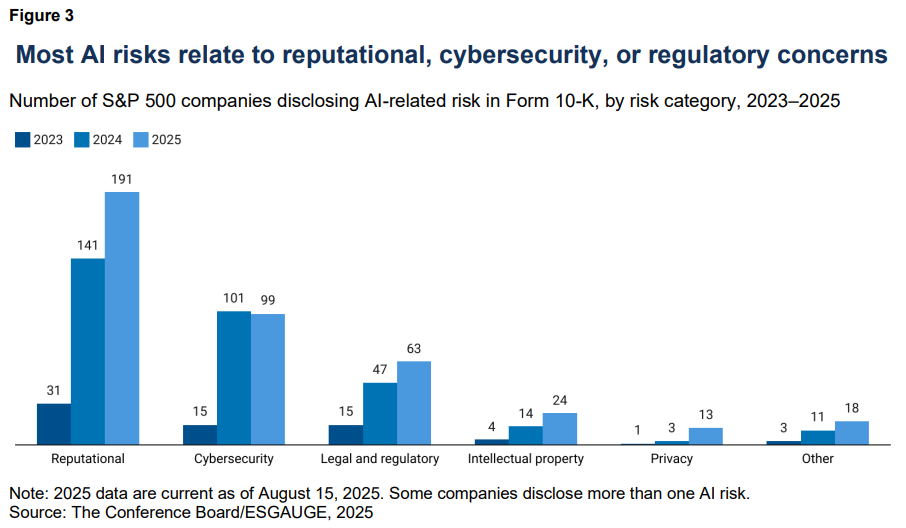

Broader Concerns About AI Risks

Experts list several concrete harms that can result from unchecked advanced AI. First, bias and discrimination can be scaled up and hidden inside opaque systems. Second, privacy can erode as models ingest more personal data. Third, deepfakes and automated misinformation could distort politics and trust. Fourth, there is the narrow but severe risk of weaponizing AI for surveillance or cyberattacks. Finally, existential risk remains a theoretical but debated possibility if a system becomes uncontrollable.

Past episodes where large models amplified bias or produced dangerous content underscore how fast risks can move from theory to practice. The open-letter movement and new petitions reflect a desire to halt that drift until governance catches up.

Public and Expert Reactions

The reaction split quickly. Many members of the public welcomed the call, and polling cited by the organizers suggests broad public support for stricter AI rules. Some technologists also praised the caution. Others pushed back: critics said banning research could slow useful breakthroughs, and some pointed out that the signatory list mixes diverse views, which complicates consensus.

Commentators asked whether celebrity endorsements add clarity or noise. Newsrooms and analysts debated the substance of the 30-word statement more than its symbolism. Still, the event forced mainstream outlets to discuss governance, not just innovation.

The Role of Celebrity Advocacy in AI Ethics

Celebrity voices can do two things fast: focus public attention and pressure policymakers. Harry and Meghan’s platform made the issue trend beyond tech circles. That raised two questions. First, can celebrities explain technical nuances well enough? Second, can their fame translate into durable policy change?

The answers are mixed. Visibility helped place AI safety on everyday news feeds. But long-term change depends on technical debates, regulatory work, and international agreements. Advocacy complements, rather than replaces, the hard work of legislators, scientists, and standards bodies.

Where Policy and Industry Stand Today

Policymakers are moving, but unevenly. Some regions push binding rules and audits. Others favor voluntary commitments by labs. Industry leaders offer competing visions. Some call for human-centred augmentation. Others aim for broad capability gains.

The Future of Life Institute and similar groups press for moratoria or strict limits until safety benchmarks exist. Investors and analysts are watching closely. The central policy question remains: can governments build enforceable rules that keep pace with rapid technical change?

Bottom Line

The October 22, 2025, statement made one thing clear: concern about superintelligence is no longer confined to labs. It is a public debate. Celebrity involvement widened awareness. The hard work starts now. Scientists must refine safety measures. Policymakers must craft enforceable rules. The public must demand transparency. If society wants benefits from advanced AI, it must also accept controls that prevent harm. The next months and years will show whether caution and creativity can move together.

Frequently Asked Questions (FAQs)

What did Prince Harry and Meghan say about superintelligent AI?

On October 22, 2025, Harry and Meghan urged a pause on superintelligent AI, saying it could grow too powerful and unsafe without strong global rules and public oversight.

What are the risks of superintelligent AI?

Superintelligent AI could make harmful choices, spread false information, or act beyond human control. Experts fear it might threaten jobs, privacy, and safety worldwide.

Can AI laws reduce the risks of superintelligence?

Yes, strong AI laws can reduce risks. Clear rules, testing, and global cooperation help ensure superintelligent systems stay safe, fair, and under human control.
